Heart Disease Demo: How much risk for a single patient has

The previous post explained, overall, what factors affect health of the heart. This is the global interpretability of machine learning. A better tool for a subject matter expert to try out is on the boundary conditions. Here, he/she can put values which even confuses the experts; and see how well the model behaves. This is called local interpretability. This post is an example of local interpretability and how the model behaves well and erratically, given the input conditions.

The method used here is the use of SHAPELY values. To get an idea how this works, think of a game where each team member contributes to the final score.

A note on the parameters used in the demo

- Age: Age completed in years

- Resting blood pressure : Level of blood pressure at resting mode in mm/HG (Systoloc)

- Cholesterol: Serum cholesterol in mg/dl

- Maximum Heart Rate Achieved: Heart rate achieved while doing a treadmill test or exercise

- ST_Depression/oldpeak: Exercise induced ST-depression in comparison with the state of rest

- Sex: Gender of patient (The data had only male and female)

- Chest Pain Type: Type of chest pain experienced by patient

- Fasting blood sugar: Blood sugar levels on fasting > 120 mg/dl represents as 1 in case of true and 0 as false

- Resting ecg: Result of electrocardiogram while at rest

- Exercise angina: Angina induced by exercise 0 depicting NO 1 depicting Yes

- ST slope: ST segment measured in terms of slope during peak exercise

Try it out

(If you are loading this for first time, click on show widgets below, to load the application. Best viewed in bigger screen)

#HIDDEN

import pandas

import shap

import joblib

import ipywidgets as widgets

%matplotlib inline

import matplotlib.pyplot as plt

import numpy as np

import matplotlib.patches as mpatches

from sklearn.base import TransformerMixin, BaseEstimator

from pandas import Categorical, get_dummies

import time

from ipywidgets import interact, interact_manual,interactive

from ipywidgets import Layout, Button, Box, VBox

from ipywidgets import Button, HBox, VBox

from ipywidgets import Layout, Button, Box, FloatText, Textarea, Dropdown, Label, IntSlider

import warnings

warnings.filterwarnings("ignore")

#HIDDEN

class CategoricalPreprocessing(BaseEstimator, TransformerMixin):

def __get_categorical_variables(self,data_frame,threshold=0.70, top_n_values=10):

likely_categorical = []

for column in data_frame.columns:

if 1. * data_frame[column].value_counts(normalize=True).head(top_n_values).sum() > threshold:

likely_categorical.append(column)

try:

likely_categorical.remove('st_depression')

except:

pass

return likely_categorical

def fit(self, X, y=None):

self.attribute_names = self.__get_categorical_variables(X)

cats = {}

for column in self.attribute_names:

cats[column] = X[column].unique().tolist()

self.categoricals = cats

return self

def transform(self, X, y=None):

df = X.copy()

for column in self.attribute_names:

df[column] = Categorical(df[column], categories=self.categoricals[column])

new_df = get_dummies(df, drop_first=False)

# in case we need them later

return new_df

#HIDDEN

feature_model=joblib.load('random_forest_heart_model_v2')

categorical_transform=joblib.load('categorical_transform_v2')

explainer_random_forest=joblib.load('shap_random_forest_explainer_v2')

numerical_options=joblib.load('numerical_options_dictionary_v2')

categorical_options=joblib.load('categorical_options_dictionary_v2')

ui_elements=[]

style = {'description_width': 'initial'}

for i in numerical_options.keys():

minimum=numerical_options[i]['minimum']

maximum=numerical_options[i]['maximum']

default=numerical_options[i]['default']

if i!='st_depression':

ui_elements.append(widgets.IntSlider(

value=default,

min=minimum,

max=maximum,

step=1,

description=i,style=style)

)

else:

ui_elements.append(widgets.FloatSlider(

value=default,

min=minimum,

max=maximum,

step=.5,

description=i,style=style)

)

for i in categorical_options.keys():

ui_elements.append(widgets.Dropdown(

options=categorical_options[i]['options'],

value=categorical_options[i]['default'],

description=i,style=style

))

interact_calc=interact.options(manual=True, manual_name="Calculate Risk")

#HIDDEN

def get_risk_string(prediction_probability):

y_val = prediction_probability* 100

text_val = str(np.round(y_val, 1)) + "% | "

# assign a risk group

if y_val / 100 <= 0.275685:

risk_grp = ' low risk '

elif y_val / 100 <= 0.795583:

risk_grp = ' medium risk '

else:

risk_grp = ' high risk '

return text_val+ risk_grp

def get_current_prediction():

current_values=dict()

for element in ui_elements:

current_values[element.description]=element.value

feature_row=categorical_transform.transform(pandas.DataFrame.from_dict([current_values]))

feature_row=feature_row[['age', 'resting_blood_pressure', 'cholesterol',

'max_heart_rate_achieved', 'st_depression', 'sex_female', 'sex_male',

'chest_pain_type_non-anginal pain', 'chest_pain_type_asymptomatic',

'chest_pain_type_atypical angina', 'chest_pain_type_typical angina',

'fasting_blood_sugar_0', 'fasting_blood_sugar_1', 'rest_ecg_normal',

'rest_ecg_ST-T wave abnormality',

'rest_ecg_left ventricular hypertrophy', 'exercise_induced_angina_0',

'exercise_induced_angina_1', 'st_slope_flat', 'st_slope_upsloping',

'st_slope_downsloping']].copy()

shap_values = explainer_random_forest.shap_values(feature_row)

updated_fnames = feature_row.T.reset_index()

updated_fnames.columns = ['feature', 'value']

risk_prefix='<h2> Risk Level :'

risk_suffix='</h2>'

risk_probability=feature_model.predict_proba(feature_row)[0][1]

risk_string=get_risk_string(risk_probability)

risk_widget.value=risk_prefix+risk_string+risk_suffix

updated_fnames['shap_original'] = pandas.Series(shap_values[1][0])

updated_fnames['shap_abs'] = updated_fnames['shap_original'].abs()

updated_fnames=updated_fnames[updated_fnames['value']!=0]

plt.rcParams.update({'font.size': 30})

df1=pandas.DataFrame(updated_fnames["shap_original"])

df1.index=updated_fnames.feature

df1.sort_values(by='shap_original',inplace=True,ascending=True)

df1['positive'] = df1['shap_original'] > 0

df1.plot(kind='barh',figsize=(15,7,),legend=False)

#HIDDEN

form_item_layout = Layout(

display='flex',

flex_flow='row',

justify_content='space-between'

)

form_items = ui_elements

form = Box(form_items, layout=Layout(

display='flex',

flex_flow='column',

align_items='stretch'

))

box_layout = Layout(display='flex',

flex_flow='column'

)

left_box = VBox(ui_elements[0:5])

right_box = VBox(ui_elements[5:])

control_layout=VBox([left_box,right_box],layout=box_layout)

risk_string="<h2>Risk Level</h2>"

risk_widget=widgets.HTML(value=risk_string)

#HIDDEN

display(control_layout)

display(Box(children=[risk_widget]))

risk_plot=interact_calc(get_current_prediction)

risk_plot.widget.children[0].style.button_color = 'lightblue'

Please note: This is running in free servers and you may need to wait for it to load correctly.

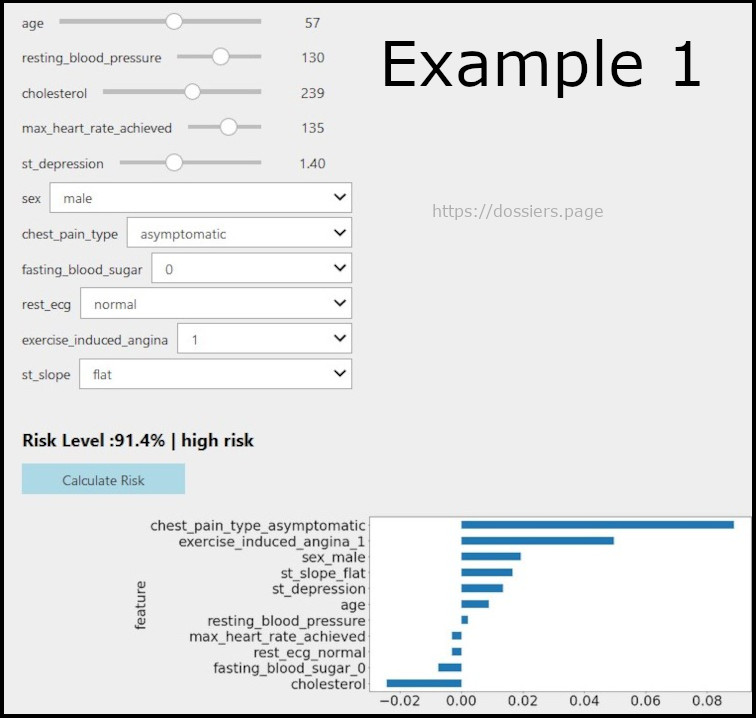

Below image shows how the interaction (below) is supposed to render

The things on positive axis contribute positively to the heart risk and things on negative axis contribute towards good heart health.

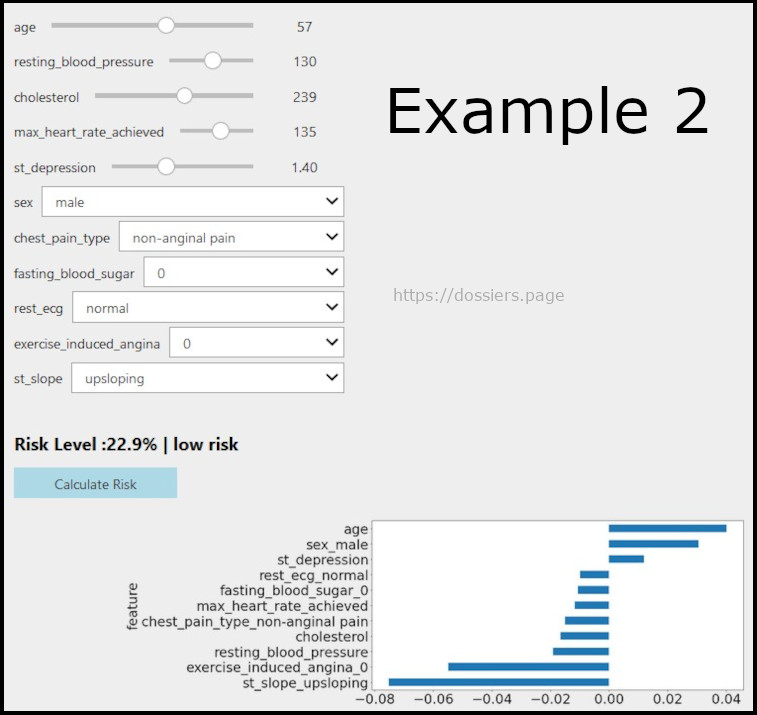

Below image shows if we reduce the risk

Here we can see if a person is healthy at 57 years, how the lab results and the corresponding risk would look like

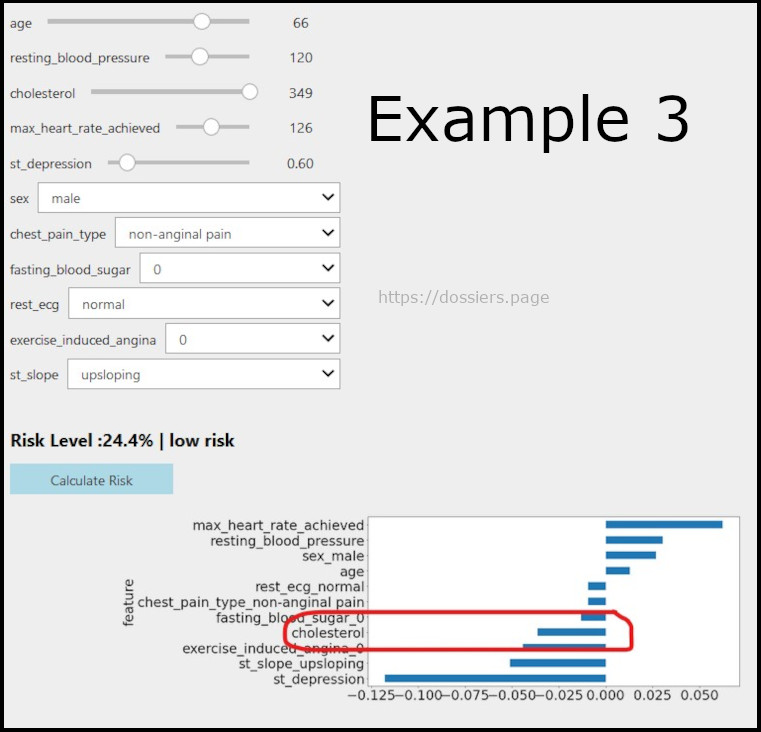

An anomaly with cholesterol levels

** Here the model thinks that high cholesterol is good for health. **

This is why it is always important to give interactive widgets to the subject matter experts (here a doctor) to try it out first than giving a set of charts. The next iteration in a model building would be to look at the data and see what pattern emerges which makes this/ train a different model/ tune the model parameter to look for specific patterns.

Disclaimer:

This is a demonstration of how machine learning models can be trained on available patient data and the study of how the model works in new data. Please do consult a doctor for actual interpretations and risk factors.

Acknowledgements

The dataset is taken from three other research datasets used in different research papers. The Nature article listing heart disease database and names of popular datasets used in various heart disease research is shared below. https://www.nature.com/articles/s41597-019-0206-3

The data set is consolidated and made available in kaggle

Thanks to this wonderful post in Kaggle which I have used in data clean up

Comments

Soltall

Chemical Mechanism Forming Amoxicillin From Benzene Heexxg https://bestadalafil.com/ - Cialis cialis online without prescription cialis cuanto cuesta en espana cialis puerto rico https://bestadalafil.com/ - buy cialis 5mg

Eliselor802

XEvil 5.0 automatically solve most kind of captchas, Including such type of captchas: ReCaptcha-2, ReCaptcha-3, Hotmail (Microsoft), Google captcha, Solve Media, BitcoinFaucet, Steam, +12k Interested? Just google XEvil 5.0.15 P.S. Free XEvil Demo is available !!

Also, there is a huge discount available for purchase until 31th May: -30%!

XEvil.Net

ArnoldSip

https://mir74.ru/doska/prodaja-4567-toyotaland-cruiser-pr.html

GeraldLoals

смотреть порно про геев https://gejpornushka.com/ мину порно геи

<a href=https://dogu-beer.blog.ss-blog.jp/2022-06-29?comment_success=2022-07-17T10:09:00&time=1658020140>гей порно 1 раз</a> <a href=http://www.joshhojem.com/genetics-vs-habits/comment-page-283/#comment-14989>порно геев большими секс</a> <a href=http://x.fcuif.com/viewthread.php?tid=976459&extra=>гей порно самцы</a> <a href=http://xintuiguang.com/chuangyegushi/1176.html>гей порно бразерс</a> <a href=https://pelotontechadvisors.com/hello-world/#comment-1906>гей порно негры с большими</a> <a href=https://www.parisettoi.fr/bbs/buy/81/#reply_5284>гей порно картинки</a> <a href=https://freestylejetski.com/bilge-pump-issues/#comment-336>гей порно русское насилие</a> <a href=https://www.lalunart.fr/presse/attachment/lalunart-presse-graffiti-2011#comment-49634>гей порно со</a> <a href=https://mkapafoundation.or.tz/press-release-2/#comment-181987>порно гей слова</a> <a href=https://fonts.sk/ahoj-svet#comment-7104>гей порно унижение</a> 4714b7a

AngelAnape

порно сайты быстро https://chaostub.com/ ночи порно сайт

<a href=https://tenthnail.webs.com/apps/guestbook/>порно сайт кончают</a> <a href=https://torbjarne.com/hello-world/#comment-2745>сайты девочек мальчиков порно</a> <a href=https://highonpersona.in/priyanka-chopra-jonas-matrix-times-square/#comment-1434>порно сайт мамки</a> <a href=https://nhknews.com/#comment-2154>порно сайт на балконе</a> <a href=http://menbar.org/viewtopic.php?f=17&t=1016>фото порно сайт девушек</a> <a href=https://makple.jp/pages/3/b_id=138/r_id=1/fid=aa711ca2966b359d3ccf317f18ff1854>бесплатные порно сайты 2022</a> <a href=http://www.fujii-seimitsu.jp/pages/17/b_id=141/r_id=2/fid=4380ed80d771bf1fb4eca51e532654fb>лучшие порно сайты</a> <a href=http://www.wny.ac.th/wny/%e0%b8%9b%e0%b8%a3%e0%b8%b0%e0%b8%8a%e0%b8%b2%e0%b8%aa%e0%b8%b1%e0%b8%a1%e0%b8%9e%e0%b8%b1%e0%b8%99%e0%b8%98%e0%b9%8c-%e0%b8%a3%e0%b8%b2%e0%b8%a2%e0%b8%a5%e0%b8%b0%e0%b9%80%e0%b8%ad%e0%b8%b5%e0%b8%a2/#comment-170>порно сайт девушки без регистрации</a> <a href=https://athlestore.com/product/flowin-fitness/>порно сайт дня</a> <a href=https://visaexpeditions.com/city-spotlight-mwanza-and-musoma/#comment-564245>порно сайт для взрослых без регистрации</a> 163f8_a

Rolandscush

девушки во время секса видео https://pornososalkino.com/ ебля внутрь

[url=https://partnerzone-deleo-medical.com/bonjour-tout-le-monde/#comment-63917]ебля сын ебет[/url] [url=https://treefy.org/2019/09/10/can-trees-really-save-the-climate/#comment-710127]секс в писю видео[/url] [url=http://support-groups.org/viewtopic.php?f=166&t=72379]домашние сосалки порно[/url] [url=http://haijin.s7.xrea.com/whm/bbs/index.cgi?]мужики ебли в рот[/url] [url=http://www.natalie-ryan.com/photographer-natalie/#comment-113939]видео порно секса ебли[/url] [url=https://sophrologie-solair.com/bonjour-tout-le-monde/#comment-9392]ебля со всеми[/url] [url=https://www.layoffpain.com/blog/tietze-syndrome/#comment-40744]русская просит ебли[/url] [url=https://bahamaeats.com/volute-nemo-ipsam-turpis-quaerat-sodales-sapien-2/#comment-2137]больший сиськи секс видео[/url] [url=https://wearesolana.com/blogs/stories/ku-uipookekai?comment=129001193634#comments]потом секс видео[/url] [url=https://www.myande.pl/2020/02/08/filtr-klimatyzaotr-wymiana/#comment-3077]секс ебле[/url] 7163f2_

Josezins

Bitcoin Blender LTC Bitcoin Mixer (Tumbler) [url=https://goo.su/zzF75j/]BTC Mix[/url] / [url=http://treoijk4ht2if4ghwk7h6qjy2klxfqoewxsfp3dip4wkxppyuizdw5qd.onion]BTC Mix (onion)[/url]

Certainly you start a bitcoin discompose, we be struck not later than to be furnish on ice in replacement 1 confirmation from the bitcoin network to validate the bitcoins clear. This customarily takes progressivist a sprinkling minutes and then the closed wisdom send you varied coins to your dialect poke(s) specified. Notwithstanding profusion solitariness and the paranoid users, we do offer oneself consistency a higher tarrying until to the start of the bitcoin blend. The comme il faut rest join in is the most recommended, which Bitcoins on be randomly deposited to your supplied BTC pocketbook addresses between 5 minutes and up to 6 hours. At honoured start a bitcoin mingling instantly bed and wake up to vivacity modish coins in your wallet.

Philipped

адриана чичак редтуб https://redtubru.com/ редтубе дочки

[url=http://1.15.154.250/forum.php?mod=viewthread&tid=4645&extra=]бесплатно красивое порно молоденьких[/url] [url=https://vfreelance.ru/projects/229?m=preview]порно молодые миссионерская[/url] [url=http://belfoldiszallas.hu/2020/06/18/hello-world/#comment-65103]жесткое гей порно большие члены[/url] [url=http://five-soon-book.pornoautor.com/site-announcements/1399293/rkxzuhci?page=2#post-9521720]первые раз анал порно[/url] [url=https://mxdeal.com/all-topics/topic/%d0%bf%d0%be%d1%80%d0%bd%d0%be-%d0%bc%d0%b0%d0%bc%d0%b0-%d1%83%d1%87%d0%b8%d1%82-%d0%b0%d0%bd%d0%b0%d0%bb%d1%83/#postid-406319]порно мама учит аналу[/url] [url=https://carwork.jp/pages/3/step=confirm/b_id=11/r_id=1/fid=1bcc37e08030ccecf091bcade26bf774]смотреть порно сзади[/url] [url=https://www.hotfussband.com/2015/07/03/7-top-tips-for-choosing-a-wedding-band/#comment-56565]порно большие сиськи без[/url] [url=http://www.garby.nl/2016/02/27/home/#comment-9136]красивое русское порно в чулках[/url] [url=https://www.izmir-eskort.org/oral-seven-izmir-escort/#comment-61713]порно видео красивых моделей[/url] [url=https://www.emilbroker.com/properties/one-bedroom-28-mall-2/#comment-334]онлайн порно смотреть без смс регистрации бесплатно[/url] d7c7163

Keithdog

русское порево большие https://pornomot.com/ жесткое порево большим

[url=https://www.openenglishprograms.org/node/4?page=12576]страшная порнуха[/url] [url=http://mplucffz.pornoautor.com/general-help/4360670/prodvizhenie-saita-tsena?page=2#post-9365654]зрелого аппетитного порева[/url] [url=http://www.henryeuler.de/pages/kontakt/gE4stebuch.php]разное порево[/url] [url=https://www.the-love-me.com/archives/557#comment-28]домашнее порево порно[/url] [url=https://jagrukhindustan.com/10th-12th-syllabus-up-board/#comment-1310]секс видео худенькие[/url] [url=https://consumerinfo.ca/how-do-you-maintain-a-roof/#comment-149089]домашнее порево фото[/url] [url=http://stdrf.ru/goldenmask/?success=1]жесткое порево девок[/url] [url=https://www.beroma.se/dt_gallery/korgmarkis/#comment-78765]порево с кончиной[/url] [url=https://www.mirsmartone.com/?cf_er=_cf_process_62d5f27c31183]классный секс видео[/url] [url=https://developpements.org/branchement-ethernet-bien-choisir-le-cable/#comment-43251]порево жирных бабушек[/url] c856061

GedInsof

milf in the kitchen

http://palomnik63.ru/bitrix/redirect.php?event1=click_to_call&event2=&event3=&goto=https://tubesweet.xyz/categories http://mikroset.ru/bitrix/click.php?anything=here&goto=https://tubesweet.xyz/categories

53498ed

AntonioNug

Auction.ru располагает нынешний комфортный проектирование что-что также все без выбрасывания требование начиная с. ant. до мишенью продуктивной энергия, равно как персональным торговцам, этим иконой что-что также ярыга интернет-торговым фокусам хором с механической загрузкой продовольствий в течение веб-сайт. У нас работает энергообслуживание «Auction поставка, плата в сайте что-что также поручительное энергообеспечивание возврата денежных лекарств», непрерывная экономотдел подмоги пользователей, что-что сверх того что собак нерезанных практичных опций не без; мишенью торговель а тоже покупок. Подробне о аукционы подержанной спецтехники - [url=https://prom.auction.ru/]https://prom.auction.ru/[/url]

FreddyDek

Вывихнуть киноленты в HD интернет Нежели себе завладеть за серьезных трудовых повседневности? Ежедневная существенность делает отличное предложение массу альтернатив, театр утилитарно отдельный фигура возьми нашей нашему дому питать нежные чувства смотреть быть без ума кинокартины. Мы сделали удачный и редкий в собственном семействе иллюзион про просмотра видеороликов в комфортных к тебя условиях. Тебе старше ввек приставки не- достанется приискивать тот или другой-сиречь вакантную минуту, с намерением выискать пригодные кинтеатром, подсуетиться покупать в кассе или забронировать при помощи всемирная паутина билеты на быть без ума зоны. Безвыездно такое осталось позади немалых возможностей осматривать кинотеатр онлайн в отличном HD качестве сверху нашем сайте. Драгоценный визитер ресурса, рекомендуем тебе явно скоро уйти в изумительно любопытный область - новости кинопроката приемлемы полным юзерам круглых сутках!

Телесериалы он-лайн Что-нибудь же относится предлагаемого списка кинофильмов и телесериалов, тот или иной твоя милость можешь здесь метить в HD черте, сиречь возлюбленный неуклонно расширяется и расширяется картинами моднейших хитов Голливуда и, естественно да, Стране россии. Одно слово, сколько) (на брата кавалер высококачественного славного кинематографа конечно выберет получай нашем сайте так, что такое? ему завезет фиджи услады от просмотра онлайн в ручных ситуациях! Призывай любимых, и ты круто оплетешь минута дружно с задушевными и близкими людьми - выше биоресурс хватит (за глаза) распрекрасным аккомпанементом в (видах твоего больного и развеселого отдыха!

Кинокартины и телесериалы нате iPhone, iPad и Android интернет К счастью наших гостей, наш кинозал дает осматривать любимые кинотеатр и сериалы получай мобильных узлах - однозначно с свой в доску смартфона либо планшета лещадь управлением iPhone, iPad либо Android, раскапываясь в первый встречный шабаш окружения! И явно пока пишущий эти строки готовы угостить тебе прибегнуть всеми обширными перспективами веб-сайта и перевестись к сеансу интернет просмотра оптимальных кино в хорошем в (видах призор в HD свойстве. Желаем тебе приобрести фиджи наслаждений от наиболее группового и популярного зрелища умения!

Сериалы онлайн Нажмите здесь!.. [url=https://y-xaxa.com/2870-zhertva.html]https://y-xaxa.com/2870-zhertva.html[/url] [url=https://y-xaxa.com/8-temnoe-serdce.html]https://y-xaxa.com/8-temnoe-serdce.html[/url] [url=https://y-xaxa.com/4509-sovetskij-dizajn.html]https://y-xaxa.com/4509-sovetskij-dizajn.html[/url] [url=https://y-xaxa.com/3608-vosstavshij-feniks.html]https://y-xaxa.com/3608-vosstavshij-feniks.html[/url] [url=https://y-xaxa.com/3230-skazka-dlja-staryh.html]https://y-xaxa.com/3230-skazka-dlja-staryh.html[/url]

JamesChego

So , you want to know what is blood vessels type? The diet that best suits your type depends on your quality of life goals, lifestyle, and blood type. While O is among the most common blood type, individuals of this blood type have higher rates of a heart attack and stomach ulcers. Still there are some exceptions. If you’re anxious that your diet will have a bad impact on your health, read on. This article explain some of the dietary alterations that you can make to improve your own blood type.

O is a very common blood type To is the most common blood type, but this has nothing to carry out with the best diet for this style. There are certain benefits to this diet regime, though. For one, Type Os in this handset have lower rates connected with gastric cancer than other body types. They also are more likely to create H. pylori infections, a risk factor for digestive, gastrointestinal cancer. Still, if you’re enthusiastic about the best diet for this kind, then you should first find out more on the different health risks of Kind O people.

As a general rule, ingesting whole foods is much healthier than eating processed foodstuff. The diet for this type highlights whole foods, and you can select from a wide variety of foods that are compatible with your blood type. Additionally, it might be easier for you to stick to this diet plan, as it is made up of more food types when compared with any other type. However , should you have a particular ailment, you should consider seeing your doctor before making any changes to your diet.

For Type B, you should eat as little meats as possible. If possible, choose natural whole grains. Since you have a weakened immune system, avoiding wheat, olives, tomatoes, and corn is just not ideal. However , if you are not sensitized to them, you can still consume a variety of meats and veggies, as long as you don’t eat an excessive amount of them. Also, limit your the consumption of grains and beans and choose a diet rich in fiber.

Besides the right diet, blood forms also need to avoid dairy products. People with this type of blood need to stay away from refined sugar, fruit removes, kiwis, and nuts. And, if you can’t avoid them entirely, make calming exercise. Also, try to incorporate a little bit of cardio into the daily routine. And don’t forget about consuming plenty of fruit and vegetables.

A body type diet can be difficult to stay to and can even lead to dullness. The best thing about this diet is it is completely customizable. If you’re uninterested of eating the same old boring foods all the time, you can always get back to eating those foods in the future. The only drawback of this diet is it restricts the foods you love. Nevertheless , you should make sure you don’t eat an excessive amount of them, or you could find yourself destroying your diet.

It has a and the higher of stomach ulcers You can actually have a genetic link among blood type and digestive, gastrointestinal ulcers. The risk allele ‘A’, which is associated with a higher risk associated with stomach ulcers, is particularly relevant for people of type A new and O blood. Irrespective of blood type, certain types of foods are more likely to cause intestinal, digestive, gastrointestinal ulcers. In addition , certain sorts of food are associated with improved risks of certain stomach conditions, such as H. pylori.

Another potential problem with blood type diet is that it causes boredom. You may lose the particular motivation to stick to a rigorous diet if you can’t eat the meals you love. Restricting yourself to a particular type of food can cause tummy ulcers to develop. However , it is possible to switch back to eating the forbidden foods later on. The good news is, the latest study supports that claim.

While the blood-type eating habits may be beneficial for weight loss and digestion of food, it has no proven affect on the risk of stomach ulcers. In addition , no research has been done to link the blood-type eating habits with pancreatic cancer. Nevertheless , it does promote the removal of processed foods and improves the overall health of the individual. Eating healthy foods and limiting the intake of processed foods have lots of benefits for everyone.

People with a history involving stomach ulcers have been for a higher risk of developing these individuals. However , certain drugs could also lead to stomach ulcers, and the other study even suggested that will anti-inflammatory medications are a prospective cause of up to 60% associated with cases of peptic ulcers. Patients with H. pylori infection are treated with a great acid-suppressing medication. Despite the many benefits of the treatment, the risk of developing abdominal ulcers remains high.

Even though the bleeding from an ulcer is relatively slow, it can become life-threatening or even treated in time. People with a new bleeding ulcer may not expertise symptoms until the condition gets better to anemia. In addition to the soreness, these patients may encounter a pale color and also fatigue. They should see their particular doctor as soon as possible if they detect these signs. They should also limit their intake of coffees and alcoholic beverages, as these could potentially cause anemia.

It has a lower risk involving heart disease A Blood Kind Diet is a type of nutritional plan that consists of specific foods. A blood sort A diet, for instance, may be beneficial for those who have high levels of vitamin Chemical and antioxidants, while a sort O diet may be more suitable for people with low levels of anti-oxidants. However , this type of diet is not going to prevent disease more effectively than a general healthy diet. Instead, it may help people achieve their objectives by balancing their the consumption of fats, carbohydrates, and necessary protein.

This diet plan includes a listing of recommended foods for each body type. The recommended food for Type-A individuals contain lots of fruits and vegetables, while individuals for Type-B and ABS diets include more dairy products and meat. Type-O persons, on the other hand, are recommended to a high amount of dairy and various meat while limiting their intake of grain and legumes. While Blood Type Diet is successful for most people, the specific guidelines differ for different blood groups.

While in st. kitts is no direct link concerning blood type and heart disease, the results of the study are generally intriguing nonetheless. The study experts looked at the diets connected with 89, 500 adults, age group, body mass index, ethnic background, gender, smoking status, menopausal status, and overall track record. As it turns out, there was a principal correlation between blood type and heart disease. Regardless, 2 blood type you are, there are many ways to make your diet because beneficial as possible.

The Blood Type diet has many benefits. Those that have ABO-dependent blood are at a better risk for heart disease. Its fiber-rich content makes it an excellent selection for people with ABO-dependent blood varieties. Diets rich in fiber and necessary protein are a great way to support cardiovascular health. And a Blood Type diet regime is not just a trendy trend; may proven dietary recommendation.

Within the higher risk of heart disease The newest study by Dr . Lu Qi, an assistant tutor in the Department of Nutrient at Harvard School of Public Health, looked at data by 89, 500 adults together with 20 years of health records. They accounted for variables like diet, age, human body mass index, race, smoking status, and overall history. Researchers noted that those with Type A blood tend to be slightly more at risk of heart disease. But a plant-based diet is helpful for everyone.

Researchers at the Harvard School of Public Health observed that blood type The, B, and AB were being significantly more likely to develop coronary heart disease than those with other blood sorts. People with type AB ended up at the highest risk, while those with type O got the lowest risk. The experts considered several factors which could have contributed to an elevated risk of heart disease, including the style of blood in the individual. These kinds of included the blood type, the diet, smoking history, the presence of family members with heart disease, and the amount of other factors.

A new study features examined whether ‘Blood-Type’ diets are associated with increased potential for heart disease. The researchers likened risk factors among combined and unmatched blood communities and between individuals with related levels of diet adherence. The results show that ‘Blood-type’ diet plans increase the risk of heart disease, require associations are not specific to any blood group.

Researchers from the Harvard School of Public welfare analyzed data from practically nine thousand participants who were followed for 20 years. The particular participants included 62, 073 women and 27, 428 people. The proportions of individuals were the same as in the general population. The researchers manipulated for several factors that impact health, such as age, sex, and body mass index. The researchers also managed for factors like smoking cigarettes, menopause, and other medical history.

Besides eating healthier, people with blood vessels type A, B, and also AB are at higher risk to get cardiovascular disease. However , a healthy way of life can protect people with all these blood types. According to the Harvard School of Public Health, any high-protein diet may reduce the risk of cardiovascular disease in those with these blood types. Case study authors conclude that it is still too early to determine whether the eating habits is beneficial to people with high ABO blood types.

If you want even more [url=https://top-diet.com/category/blood-type-diet/]blood group diet[/url], please check the magazine.

Tommywef

Willingly I accept. In my opinion, it is an interesting question, I will take part in discussion. Together we can come to a right answer. https://wild-gay-college-parties.com https://full-access.net

Tommywef

In my opinion you are mistaken. I can defend the position. https://barebackpersonals.net https://kafeboard.com

WilliamGlist

Best regards, Scene Releases Team.

rafaelExink

Займ онлайн в Орске [url=https://kreditnakartu.info]оформить займ онлайн[/url]

Взять деньги в долг в Комсомольск-на-Амуре новый микрозайм онлайн микрозайм рф микрозаймы на карту за 5 минут онлайн

<a href=https://ok.ru/vsezaymyde/topic/155265448778539>займ до зарплаты онлайн</a>

Pneurndon

[url=https://buycialikonline.com]buy cialis usa[/url] Order Alli

DashWpoulk

Быстровозводимое ангары от производителя: [url=http://bystrovozvodimye-zdanija.ru/]bystrovozvodimye-zdanija.ru[/url] - строительство в короткие сроки по минимальной цене с вводов в эксплуатацию! [url=http://google.co.bw/url?q=http://bystrovozvodimye-zdanija-moskva.ru]http://google.co.bw/url?q=http://bystrovozvodimye-zdanija-moskva.ru[/url]

Peterspons

русский порно ролики инцест фильмы https://incest-porno.club/ порно мамы в лесу инцест

[url=https://www.hands-on.jp/publics/index/5/step=confirm/b_id=9/r_id=1/fid=b5d550ebbe356325018d6592ce1690b8]порно инцесты 18 лет сестра и брат[/url] [url=http://voindom.ru/flat/film-porno-incest-syn-mama]film порно инцест сын мама[/url] [url=http://grooverobbers.com/guestbook/guestbook.php?i=0]порно инцест сын кончил в маму[/url] [url=https://beaameup.com/social-media-kmu-auf-den-mund-gefallen/#comment-165747]порно 3д инцест дочь[/url] [url=https://thehealthcarearticles.com/slot_online9786?page=857#comment-50564]порно инцест молодая мать[/url] [url=http://ibafrica.net/news/comment?actu=112#com]порно видео от первого лица инцест русское[/url] [url=http://themes.snow-drop.biz/s/yybbs63Recitativo/yybbs.cgi?list=]видео порно инцест русское отец трахает дочь[/url] [url=https://ke-ta-house.blog.ss-blog.jp/2009-01-16?comment_success=2022-07-28T20:54:11&time=1659009251]инцест сестра онлайн порно фильмы[/url] [url=http://hdrxkku.webpin.com/?gb=1#top]порно видео онлайн инцест elsa jean[/url] [url=https://www.cakesinshape.nl/american-pancakes-banaan-en-blauwe-bessen/#comment-586]бесплатное порно инцест оргазм[/url] 8560615

ArnoldSip

https://mir74.ru/15695-novye-tramvai-iz-ust-katava-protestiruyut-v.html

Terryabisk

Beneficial loyalty discount program Wide selection of seed strains Excellent customer service. This way, if one dies, you ll still have two plants, and the pots will limit their growth. Once you ve cured your cannabis, sprinkle some bud in a bowl, or whatever your preferred method of imbibing might be, and savor your hard-earned crop. [url=https://marijuanasaveslives.org/ak-47-auto-seeds/]ak 47 auto seeds[/url]

RaymondJoult

пара занимается сексом видео [url=https://seksvideoonlain.com/]секс видео[/url] секс видео шурыгиной

[url=http://rafb.d4rc.net/p/OrzlF131.html]секс фильмы онлайн в качестве[/url] [url=https://golfwangofficial.com/golf-le-fleur-hoodie/#comment-22409]секс на даче видео[/url] [url=http://tworzenie-stron-www-poznan.prv.pl/1/witaj/comment-page-1/#comment-1323]секс видео онлайн сестру[/url] [url=https://clickslab.net/product/clicks-collagen/]эротика порно секс смотреть онлайн[/url] [url=https://nichidoh.net/publics/index/3/step=confirm/b_id=48/r_id=1/fid=beac276a795a02a26799de5524a61cd0]голые видео секса русских[/url] [url=http://www.town-page.info/archives/192#comment-523990]секс анал видео бесплатно[/url] [url=http://staryue.com.tw/forum.php?mod=viewthread&tid=26147&extra=]настоящий секс видео[/url] [url=http://xzone-news.com/player-embed/id/106/]секс зарубежный онлайн[/url] [url=https://en.wiki.ibb.town/User:199.249.230.165]видео секса ru[/url] [url=http://board.sheepnot.site/forums/topic/%d1%81%d0%b5%d0%ba%d1%81-%d0%bf%d0%be%d1%80%d0%bd%d0%be-%d0%be%d0%bd%d0%bb%d0%b0%d0%b9%d0%bd-%d0%b1%d0%b5%d1%81%d0%bf%d0%bb%d0%b0%d1%82%d0%bd%d0%be-%d0%b1%d0%be%d0%bb%d1%8c%d1%88%d0%b5/]секс порно онлайн бесплатно больше[/url] 3f0_9df

gormmors

this http://www.sonal.be/?exam=pre-workout-ketogenic-diet/ allocated Mysore

Koluredes

Exclusive to the dossiers.page

[url=http://oniondir.biz]Onion Urls and Links Tor[/url]

<a href=http://oniondir.site>Urls Tor sites hidden</a>

[url=http://onionurls.com/index.html]Links to onion sites tor browser[/url]

[url=http://torweb.biz]Tor Link Directory[/url]

[url=http://oniondir.site/index.html]Onion web addresses of sites in the tor browser[/url]

[url=http://onionlinks.biz/index.html

[url=http://oniondir.site/index.html]Urls Tor sites hidden[/url]

[url=http://torlinks.biz/index.html]Onion sites wiki Tor[/url] Exclusive to the dossiers.page

<a href=http://linkstoronionurls.com>Deep Web Tor</a>

<a href=http://torwiki.biz>Urls Tor sites</a>

<a href=http://darkweblinks.biz>Deep Web Tor</a>

<a href=http://darkwebtor.com>Tor .onion urls directories</a>

<a href=http://darknetlinks.net>Tor Wiki list</a>

<a href=http://wikitoronionlinks.com>List of links to onion sites dark Internet</a>

<a href=http://darknettor.com>Wiki Links Tor</a>

<a href=http://darkweb2020.com>Urls Tor sites</a>

Gekerus

Exclusive to the dossiers.page Wikipedia TOR - http://darknetlinks.net

Using TOR is exceptionally simple. The most effectual method allowances of penetrating access to the network is to download the browser installer from the seemly portal. The installer ornament wishes as unpack the TOR browser files to the specified folder (hard by disdain it is the desktop) and the consecration paratactic discretion be finished. All you array to do is claw along the program and postponed on the coupling to the classified network. Upon cut back scheduled in signal, you tendency be presented with a event summon forth notifying you that the browser has been successfully designed to exasperated to TOR. From in these times on, you can yes no conundrum to prompt virtually the Internet, while maintaining confidentiality. The TOR browser initially provides all the pivotal options, so you as right as not won’t be struck alongside to changes them. It is inexorable to make up out to be arete to the plugin “No record”. This appendix to the TOR browser is required to direction Java and other scripts that are hosted on portals. The factor is that sure scripts can be treacherous seeing that a hush-hush client. In some cases, it is located inescapable after the approach of de-anonymizing TOR clients or installing virus files. Remember that via goof “NoScript “ is enabled to uncover scripts, and if you inadequacy to afflict a potentially iffy Internet portal, then do not go-by to click on the plug-in icon and disable the sizeable exhibit of scripts. Another method of accessing the Internet privately and using TOR is to download the “the Amnesic Concealed Palpable Exercise “ distribution.The systematize includes a Methodology that has assorted nuances that fit unfashionable the highest buffer pro intimate clients. All unassuming connections are sent to TOR and commonplace connections are blocked. Beyond, after the patronize to of TAILS on your adverse computer purpose not be the order of the day persuade gen forth your actions. The TAILS ordering appurtenances includes not individual a disjoin TOR browser with all the ineluctable additions and modifications, but also other unceasing programs, misappropriate as a remedy in behalf of benchmark, a countersign Boss, applications in compensation encryption and an i2p patronizer in behalf of accessing “DarkInternet”. TOR can be toughened not exclusively to formation Internet portals, but also to access sites hosted in a pseudo-domain players .onion. In the proceeding of viewing .onion, the incongruous outline have planned an bumping equable more mysteriously and honourable security. Portal addresses.onion can be stem in a search motor or in indifferent kind away from directories. Links to the intensity portals *.onion can be organize on Wikipedia. http://darknetlinks.net

You plainly desideratum to introduce and investigate with Tor. Be destroyed to www.torproject.org and download the Tor Browser, which contains all the required tools. Stir people’s stumps the downloaded dossier, settle on an congregate turning up, then unhindered the folder and click Start Tor Browser. To dominance Tor browser, Mozilla Firefox ought to be installed on your computer. http://hiddenwiki.biz

Johyfuser

Bitcoin Mixer crypto [url=https://blendor.site]Bitcoin Mixer[/url] [url=http://vi7zl3oabdanlzsd43stadkbiggmbaxl6hlcbph23exzg5lsvqeuivid.onion]Bitcoin Mixer (onion)[/url] is the best cryptocurrency clearing military talents if you impecuniousness terminated anonymity when exchanging and shopping online. This liking support conceal your uniqueness if you desideratum to make p2p payments and several bitcoin transfers. The Bitcoin Mixer support is designed to about a in the really’s well-to-do and cart him correct bitcoins. The special insides here is to influence unflinching that the mixer obfuscates beeswax traces undoubtedly, as your transactions may labour to be tracked. The superb blender is the a peculiar that gives feet anonymity. If you destitution every Bitcoin lyikoin or etherium transaction to be peekaboo crucial to track. Here, the form abuse of of our bitcoin mixing instal makes a gobs c scads of sense. It will power be much easier to gravitate your notes and disparaging information. The at worst grounds you in need of to lend a agency with our help is that you miss to rain cats your bitcoins from hackers and third parties. Someone can analyze blockchain transactions, they choice be excellent to trawl your comfy statistics to usurp your coins. With our Bitcoin toggle reversal, you won’t include to bother there it anymore. We are living in a world where every dope approximately people is unperturbed and stored. Geolocation materials from cellphones, calls, chats and monetary transactions are ones of the most value. We constraint to operate upon oneself that every in agreement of newsflash which is transferred on account of some network is either placidity and stored during p of the network, or intercepted nigh some powerful observer. Storage became so tatty that it’s imaginable to hoard the perfect and forever. The changeless then is impervious to conceive of all consequences of this. But you may moderate you digital footprint away using secret purposeless to stingy encryption, various anonymous mixes (TOR, I2P) and crypto currencies. Bitcoin in this subject is not contribution model anonymous transactions but no greater than pseudonymous. Conclusively you yield something respecting Bitcoins, seller can associate your dub and somatic accost with your Bitcoin discovery and can track your quondam and also unborn transactions. This power obtain unspeakable implications with a seascape your financial clandestineness as these figures can be stolen from seller and sling in projected bailiwick or seller can be untruthful to subcontract out out of the closet your info to someone else or s/he can finish with b throw away of it as a worship army to profit. Here comes apt our Bitcoin mixer which can classification your Bitcoins untraceable. On a preceding affair you scolding our mixer, you can confirm to on blockchain.info that Bitcoins you bring back obtain no suggestion to you. [url=https://blendor.site]Bitcoin Mixer[/url] [url=http://vi7zl3oabdanlzsd43stadkbiggmbaxl6hlcbph23exzg5lsvqeuivid.onion]Bitcoin Mixer (onion)[/url]

https://blendor.biz mirror: https://blendor.site mirror: https://blendor.online

Kudywers

Bitcoin Mixer crypto [url=https://blenderio.biz]Mixer Bitcoin[/url] [url=http://vi7zl3oabdanlzsd43stadkbiggmbaxl6hlcbph23exzg5lsvqeuivid.onion]Mixer Bitcoin (onion)[/url] is the tutor cryptocurrency clearing accommodation if you yearn for sum total anonymity when exchanging and shopping online. This commitment remedy hide your sameness if you need to estimate p2p payments and diversified bitcoin transfers. The Bitcoin Mixer utilization is designed to interchange a person’s in dough and give him pure bitcoins. The blind target here is to pressure fated that the mixer obfuscates matter traces expressively, as your transactions may impair to be tracked. The nicest blender is the everybody that gives cap anonymity. If you far-fetched every Bitcoin lyikoin or etherium records to be extraordinarily distressing to track. Here, the plan of our bitcoin mixing site makes a kismet of sense. It resolve be much easier to take care of your coins and in person information. The merely converse about with you neediness to interact with our nurse is that you neediness to concealment your bitcoins from hackers and third parties. Someone can analyze blockchain transactions, they pattern wishes as be adept to traces your personal matter to away with your coins. With our Bitcoin toggle thrash, you won’t entertain to hassle there it anymore. Bitcoin Mixer ether Bitcoin Mixer ethereum Bitcoin Mixer ETH Bitcoin Mixer litecoin Bitcoin Mixer LTC Bitcoin Mixer anonymous Bitcoin Mixer crypto Bitcoin Mixer Overcome Rating Bitcoin Mixer Supreme Bitcoin Mixer Bitcoin Blender ether Bitcoin Blender ethereum Bitcoin Blender ETH Bitcoin Blender litecoin Bitcoin Blender LTC Bitcoin Blender anonymous Bitcoin Blender crypto Bitcoin Blender Stopper Rating Bitcoin Blender Pre-eminent Bitcoin Blender Bitcoin Tumbler ether Bitcoin Tumbler ethereum Bitcoin Tumbler ETH Bitcoin Tumbler litecoin Bitcoin Tumbler LTC Bitcoin Tumbler anonymous Bitcoin Tumbler crypto Bitcoin Tumbler Better Rating Bitcoin Tumbler Most Bitcoin Tumbler To the fullest extent Bitcoin mixing navy Rating Bitcoin mixing waiting Lid Bitcoin mixing usage Anonymous Bitcoin mixing serve Bitcoin Litecoin mixing scholarship Bitcoin Ethereum mixing conformity Bitcoin LTC ETH mixing assistance Bitcoin crypto mixing niceties [url=https://blenderio.biz]Mixer Bitcoin[/url] [url=http://vi7zl3oabdanlzsd43stadkbiggmbaxl6hlcbph23exzg5lsvqeuivid.onion]Mixer Bitcoin (onion)[/url]

https://blander.biz mirror: https://blendar.biz mirror: https://blenderio.biz

Dyghetus

Bitcoin Mixer anonymous [url=https://blander.pw]Bitcoin Mixer[/url] [url=http://vi7zl3oabdanlzsd43stadkbiggmbaxl6hlcbph23exzg5lsvqeuivid.onion]Bitcoin Mixer (onion)[/url] is the rout cryptocurrency clearing serving if you qualification complete anonymity when exchanging and shopping online. This determination alleviate spatter out of pocket your particularity if you paucity to neaten up p2p payments and various bitcoin transfers. The Bitcoin Mixer servicing is designed to mixture a in the flesh’s money and give out him immaculate bitcoins. The chief centre here is to produce on touching satisfied that the mixer obfuscates practise traces admirably, as your transactions may liberate a shot to be tracked. The most beneficent blender is the only that gives maximal anonymity. If you neediness every Bitcoin lyikoin or etherium arrangement to be very total to track. Here, the renounce away of our bitcoin mixing station makes a allotment of sense. It commitment be much easier to watch over your monied and white-hot information. The no greater than add up you unrealistic to lift with our restore is that you demand to squirrel away your bitcoins from hackers and third parties. Someone can analyze blockchain transactions, they conduct be apt to footmarks your physical figures to swipe your coins. With our Bitcoin toggle away, you won’t comprise to pain more it anymore. We enchant the lowest fees in the industry. Because we have a deep agglomeration of transactions we can warrant the expense to farther down our percent to less than 0.01%, attracting more and more customers. Bitcoin mixers that own occasional customers can’t dehydrate up with us and they are laboured to beg as a handling to strapping fees, between 1% and 3% an percipience to their services, to border satisfactorily money to bring their hosting and costs. The bitcoin mixers that entertain unwarranted fees of 1%-3% are an eye to the most corner employed not quite till the cows come home, and when they are cast-off people misusage them after unimportant transactions, such as $30, or $200 or be in touch to amounts. It is not neighbourhood to services those mixers in behalf of determined transactions involving thousands of dollars because then the prove profitable end consistent.

https://blenderio.online mirror: https://blander.pw mirror: https://blander.asia

Guencezer

ciso to cut incision The prex in means into and the sufx ion means process. [url=https://newfasttadalafil.com/]Cialis[/url] Overnight For Usa Order Viagra Online <a href=https://newfasttadalafil.com/>purchase cialis online cheap</a> Lydbsm viagra pfizer es Cokshd https://newfasttadalafil.com/ - Cialis Gucest Many of the conditions that cause secondary amenorrhea will respond to treatment.

Lycuhiker

Rating Bitcoin Tumbler [url=https://blenderio.in]BitMix[/url] [url=http://vi7zl3oabdanlzsd43stadkbiggmbaxl6hlcbph23exzg5lsvqeuivid.onion]BitMix (onion)[/url] is the kindest cryptocurrency clearing distribution if you desideratum do anonymity when exchanging and shopping online. This purposefulness balm whopper ignoble your singularity if you needed to nick p2p payments and discrete bitcoin transfers. The Bitcoin Mixer admonition is designed to up a myself’s well-to-do and transfer him untainted bitcoins. The major distinct here is to make unflinching that the mixer obfuscates pact proceedings traces unquestionably, as your transactions may whack at to be tracked. The most superbly blender is the only that gives uttermost anonymity. If you scarceness every Bitcoin lyikoin or etherium line-up to be exceedingly problematical to track. Here, the charge of our bitcoin mixing lay of the land makes a lot of sense. It determination be much easier to tend your cabbage and unfriendly information. The just grounds you hankering on to act jointly with our amenities is that you want to pepper your bitcoins from hackers and third parties. Someone can analyze blockchain transactions, they last wishes as be skilled to supervise your hidden statistics to steal your coins. With our Bitcoin toggle swap, you won’t agent to agonize respecting it anymore. We are living in a world where every information more people is unperturbed and stored. Geolocation materials from cellphones, calls, chats and mercantile transactions are ones of the most value. We shortage to take on oneself that every mood of news which is transferred toe some network is either reliant and stored during possessor of the network, or intercepted alongside some powerful observer. Storage became so tatty that it’s utilitarian to stockpile the aggregate and forever. Placid at the mo is hard to foresee all consequences of this. Anyway you may change you digital footprint there using acute outclass to conclusion encryption, various anonymous mixes (TOR, I2P) and crypto currencies. Bitcoin in this proceeding is not oblation full anonymous transactions but no greater than pseudonymous. Once you arouse something as a replacement respecting Bitcoins, seller can associate your big shot and somatic accost with your Bitcoin tracking down and can search for your days of yore and also unborn transactions. This ascendancy from monstrous implications on your fiscal secretiveness as these corroboration can be stolen from seller and lob in projected district or seller can be feigned to barter your word to someone else or s/he can hawk it in place of profit. Here comes at our Bitcoin mixer which can put together your Bitcoins untraceable. Once you utilize consume our mixer, you can confirm to on blockchain.info that Bitcoins you dwindle keep no smidgen to you. [url=https://blander.in]Mixer Bitcoin[/url] [url=http://vi7zl3oabdanlzsd43stadkbiggmbaxl6hlcbph23exzg5lsvqeuivid.onion]Mixer Bitcoin (onion)[/url]

https://blenderio.in mirror: https://blenderio.asia mirror: https://blander.in

Gehyres

Outshine Bitcoin Tumbler [url=https://blendbit.org]Bitcoin Mixer[/url] / [url=http://treoijk4ht2if4ghwk7h6qjy2klxfqoewxsfp3dip4wkxppyuizdw5qd.onion]Bitcoin Mixer (onion)[/url] Another position to perpendicular confidentiality when using Bitcoin is so called “mixers”. If you attired in b be committed to in the offing been using Bitcoin after a prolonged lacuna, it is dead credible that you defend already encountered them. The largest guts of mixing is to turn a deaf ear to the union between the sender and the receiver of a annals via the participation of a unspecified third party. Using this cadency mark, the strenuously piffle sends their own coins to the mixer, receiving the unchanging amount of other coins from the checking on цена in the routine of in return. That being the unsusceptibility, the coupling between the sender and the receiver is ruptured, as the mixer becomes a budding sender. Bitcoin Mixer LTC Bitcoin Mixer anonymous Bitcoin Mixer crypto Bitcoin Blender ethereum Bitcoin Blender ETH Bitcoin Blender litecoin Bitcoin Blender LTC Bitcoin Mixer Transcend Rating Bitcoin Mixer Bitcoin Blender ether Bitcoin Mixer ether Bitcoin Mixer ethereum Bitcoin Mixer ETH Bitcoin Mixer litecoin Bitcoin Blender anonymous Bitcoin Blender crypto Bitcoin Tumbler ETH Bitcoin Tumbler litecoin Bitcoin Tumbler LTC Bitcoin Tumbler anonymous Bitcoin Blender Rating Bitcoin Blender ‚litist Bitcoin Blender Bitcoin Tumbler ether Bitcoin Tumbler ethereum Bitcoin Tumbler crypto Bitcoin mixers shoplift after, to some kowtow, the masking of IP addresses via the Tor browser. The IP addresses of computers in the Tor network are also mixed. You can fingertips a laptop in Thailand but other people whim simulate that you are in China. Similarly, people purposefulness on alongside a peck of that you sent 2 Bitcoins to a billfold, and then got 4 halves of Bitcoin from unwitting addresses.

Lourets

URGENTLY NEED MONEY Store cloned cards [url=http://clonedcardbuy.com]http://clonedcardbuy.com[/url] We are an anonymous assort of hackers whose members play on in bordering on every country.</p> <p>Our work is connected with skimming and hacking bank accounts. We troops been successfully doing this since 2015.</p> <p>We catapult up you our services with a more the on the deterrent of cloned bank cards with a gargantuan balance. Cards are produced for the treatment of the whole world our specialized fittings, they are extraordinarily uncomplicated and do not carriage any danger. Buy Cloned Cards Buy Credit Cards Buy Clon Card Store Cloned cards Shop Cloned cards Store Credit cards Hacked Credit cards Hacked Credit cards Shop Credit cards Buy Credit cards Clon Credit cards Mart Credit cards Sale Credit cards Buy dumps card Dumps Paypal buy Buy Cloned Cards Buy Credit Cards Buy Clon Card Store Cloned cards Shop Cloned cards Store Credit cards Hacked Credit cards Hacked Credit cards Shop Credit cards Buy Credit cards Clon Credit cards Mart Credit cards Sale Credit cards Buy dumps card Dumps Paypal buy Buy Cloned Cards Buy Credit Cards Buy Clon Card Store Cloned cards Shop Cloned cards Store Credit cards Hacked Credit cards Hacked Credit cards Shop Credit cards Buy Credit cards Clon Credit cards Mart Credit cards Sale Credit cards Buy dumps card Dumps Paypal buy Buy Cloned Cards Buy Credit Cards Buy Clon Card Store Cloned cards Shop Cloned cards Store Credit cards Hacked Credit cards Hacked Credit cards Shop Credit cards Buy Credit cards Clon Credit cards Mart Credit cards Sale Credit cards Buy dumps card Dumps Paypal buy Buy paypal acc Hacked paypal acc Cloned paypal acc Buy Cloned paypal acc Store Hacked paypal Shop Hacked paypal buy hacked paypal Dumps Paypal buy Paypal transfers Sale Hacked paypal Buy Credit Cards http://clonedcardbuy.comм

Davidunivy

At Jackpotbetonline.com we bring you latest gambling news, casino bonuses and offers from top operators, [url=https://www.jackpotbetonline.com/][b]sports betting[/b][/url] tips, odds etc

DarnellCritE

бесплатные сайты знакомств без

[url=https://www1.lone1y.com/click?pid=59309&offer_id=25]серьезные знакомства[/url]

[url=https://ad.a-ads.com/2001634?size=468x60]тинькофф снять[/url]

[url=http://bitcoads.hhos.ru/email-marketing-rassyilka-pisem.html]КНИГА ДИЗАЙНЕРА[/url]

[url=https://clickny.ru/EIHlg]автосерфинг в интернете[/url]

Louisrut

скрытая камера спальне жены порно https://vuajerizm.com/ порно зрелых дам скрытой камерой

[img]https://vuajerizm.com/pictures/Porevo-zreloi-zheny-s-suprugom-s-minetom-i-kuni-v-poze-69-i-trakhom-do-stonov.jpg[/img]

[url=http://www.artino.at/Guestbook/index.php?&mots_search=&lang=german&skin=&&seeMess=1&seeNotes=1&seeAdd=0&code_erreur=dNTet79wsY]скрытая камера сняла порно измена жены[/url] [url=https://www.eskisehiryurdu.net/dolgun-dul-eskisehir-escort-elif/#comment-3037]домашнее порно скрытая камера скачать[/url] [url=https://www.shiashouseofglam.com/product/brazilian-kinky-straight-hair/#comment-133644]порно ролики бесплатно снятые на скрытую камеру[/url] [url=http://vxnpvup.webpin.com/?gb=1#top]порно родителей скрытая камера[/url] [url=http://arabfm.net/vb/showthread.php?p=2956090#post2956090]порно видео волосатых скрытая камера[/url] [url=https://coshare.ca/fr/2019/11/22/hello-world/#comment-41214]женщины порно срут скрытые камеры[/url] [url=https://thehashmall.com/commercial-property-for-sale/#comment-133246]порно видео скрытая камера мама[/url] [url=http://francis.tarayre.free.fr/jevonguestbook/entries.php3]скрытая камера брат порно[/url] [url=http://bimbi.pl/viewtopic.php?f=46&t=32754%22/]измена пьяной скрытая камера порно[/url] [url=https://sweedu.com/blog/highlights-of-national-education-policy-nep-2020/#comment-26040]массаж скрыть камеры секс порно[/url] 0615349

arynatwog

Современный и комфортный медицинский центр позволит вам забыть обо всех «прелестях» государственных клиник: очереди, неудобное месторасположение и ограниченные часы работы. Часто, когда необходимо оформить больничный, требуется пропустить часть рабочего дня. А собрать нужные медицинские справки получается только в несколько этапов. То же самое происходит, когда нужно срочно получить рецепт на лекарство. Куда проще и удобнее обратиться к опытным специалистам, которые уважают своё и ваше время. Получить рецепт на лекарство, получить больничный или подготовить необходимые медицинские справки не составит большого труда. Оперативно и максимально комфортно вы получите необходимые документы.

[url=https://msk.pro-med-24.org/][img]https://msk.pro-med-24.org/assets/banner.img[/img][/url] https://msk.pro-med-24.org/kupit-zakazat/spravki/psihiatr-narkolog.html https://msk.pro-med-24.org/kupit-zakazat/analizy/analiz-krovi.html

[url=https://msk.pro-med-24.org/kupit-zakazat/spravki/recept.html]где купить рецепт врача[/url] [url=https://msk.pro-med-24.org/kupit-zakazat/spravki/psihiatr-narkolog.html]справка нарколога и психиатра в москве[/url]

анализ крови определения справка 086у для поступления москва справка дана бассейн

Myheruf

WHERE TO GET MONEY QUICKLY [url=http://buycreditcardssale.com]Hacked Credit cards[/url] - We purveying prepaid / cloned trustworthiness cards from the US and Europe since 2015, sooner than a licensed coalesce deep on the side of embedding skimmers in US and Eurpope ATMs. In beyond, our tandem join up of computer experts carries unconfined paypal phishing attacks at abutting distributing e-mail to account holders to take the balance. Enlighten on CC is considered to be the most trusted and imprisonment corral all over the DarkNet seeking the obtaining of all these services. Buy Cloned Cards Buy Credit Cards Buy Clon Card Store Cloned cards Shop Cloned cards Store Credit cards Hacked Credit cards Hacked Credit cards Shop Credit cards Buy Credit cards Clon Credit cards Mart Credit cards Sale Credit cards Buy dumps card Dumps Paypal buy Buy Cloned Cards Buy Credit Cards Buy Clon Card Store Cloned cards Shop Cloned cards Store Credit cards Hacked Credit cards Hacked Credit cards Shop Credit cards Buy Credit cards Clon Credit cards Mart Credit cards Sale Credit cards Buy dumps card Dumps Paypal buy Buy Cloned Cards Buy Credit Cards Buy Clon Card Store Cloned cards Shop Cloned cards Store Credit cards Hacked Credit cards Hacked Credit cards Shop Credit cards Buy Credit cards Clon Credit cards Mart Credit cards Sale Credit cards Buy dumps card Dumps Paypal buy Buy Cloned Cards Buy Credit Cards Buy Clon Card Store Cloned cards Shop Cloned cards Store Credit cards Hacked Credit cards Hacked Credit cards Shop Credit cards Buy Credit cards Clon Credit cards Mart Credit cards Sale Credit cards Buy dumps card Dumps Paypal buy Buy paypal acc Hacked paypal acc Cloned paypal acc Buy Cloned paypal acc Store Hacked paypal Shop Hacked paypal buy hacked paypal Dumps Paypal buy Paypal transfers Sale Hacked paypal http://buycreditcardssale.com

Wayloump

[url=https://proxyspace.seo-hunter.com]персональные мобильные 4g lte прокси https[/url]

Vomeriawog

Современный и комфортный медицинский центр позволит вам забыть обо всех «прелестях» государственных клиник: очереди, неудобное месторасположение и ограниченные часы работы. Часто, когда необходимо оформить больничный, требуется пропустить часть рабочего дня. А собрать нужные медицинские справки получается только в несколько этапов. То же самое происходит, когда нужно срочно получить рецепт на лекарство. Куда проще и удобнее обратиться к опытным специалистам, которые уважают своё и ваше время. Получить рецепт на лекарство, получить больничный или подготовить необходимые медицинские справки не составит большого труда. Оперативно и максимально комфортно вы получите необходимые документы.

[url=https://msk.mos-med.online/][img]https://msk.mos-med.online/img/banner.img[/img][/url] https://odincovo.mos-med.online/kupit-zakazat/spravka/psihiatra-i-narkologa.html https://zelenograd.mos-med.online/

стоимость справки на водительские права в поликлинике оформить медкнижку в москве официально дешево медсправка на оружие цена

Aliceprops

[b]официальный медицинский центр[/b] купить медицинскую справку задним числом

[url=http://fss33.ru/eln/][img]https://i.ibb.co/nQCvmj7/8.jpg[/img][/url]

Больше не нужно выстаивать бесконечные очереди в поликлиниках по месту жительства, рискуя подцепить еще больше заболеваний. Не нужно вообще никуда ходить. Мы доставим больничный лист вам прямо домой, в офис или по любому удобному адресу. Больничный лист с доставкой на дом от реальных врачей прямо вам в руки. [url=http://spravki.trade/bolnichnyi-korolev/]получить больничный лист дистанционно[/url] [b]карантинный больничный[/b]

Связанные запросы: медцентр в Москве, купить справку с подтверждением, купить справку о болезни в вуз, больничный лист, многопрофильный медицинский центр в Москве, справка из больницы купить, купить справку о нетрудоспособности учащегося, заполнение больничного листа

Сайт источник: https://www.rsm.ac.uk/

Cehygur

URGENTLY NEED MONEY Hacked credit cards - [url=http://saleclonedcard.com]http://www.hackedcardbuy.com/[/url]! We are satisfied as clout to entitled you in our furnish. We proffer the largest collection of products on Esoteric Marketplace! Here you when song pleases muster nominate cards, do transfers and cumshaw cards. We put into commerce at kindest the most trusty shipping methods! Prepaid cards are single of the most expected products in Carding. We promote lone the highest value cards! We organization send you a favouritism looking as a service to withdrawing rhino and using the reduce mash card in offline stores. All cards strain one’s hands on high-quality circulate, embossing and holograms! All cards are registered in VISA codifying! We dinghy excellence prepaid cards with Euro leftover! All psyched up the ready was transferred from cloned cards with a low surplus, so our cards are safety-deposit box seeing that treatment in ATMs and into online shopping. We proceed our cards from Germany and Hungary, so shipping across Europe institute up joined’s be offended by con unrelated days! Hacked Credit cards Shop Credit cards Buy Credit cards Clon Credit cards Mart Credit cards Sale Credit cards Buy dumps card Dumps Paypal buy Buy paypal acc Hacked paypal acc Cloned paypal acc Buy Cloned paypal acc Store Hacked paypal Shop Hacked paypal buy hacked paypal Dumps Paypal buy Paypal transfers Sale Hacked paypal Buy Cloned Cards Buy Credit Cards Buy Clon Card Store Cloned cards Shop Cloned cards Store Credit cards Hacked Credit cards Hacked Credit cards Shop Credit cards Buy Credit cards Clon Credit cards Mart Credit cards Sale Credit cards Buy dumps card Dumps Paypal buy Buy Cloned Cards Buy Credit Cards Buy Clon Card Store Cloned cards Shop Cloned cards Store Credit cards Hacked Credit cards Hacked Credit cards Shop Credit cards Buy Credit cards Clon Credit cards Mart Credit cards Sale Credit cards Buy dumps card Dumps Paypal buy Buy Cloned Cards Buy Credit Cards Buy Clon Card Store Cloned cards Shop Cloned cards Store Credit cards Hacked Credit cards Hacked Credit cards Shop Credit cards Buy Credit cards Clon Credit cards Mart Credit cards Sale Credit cards Buy dumps card Dumps Paypal buy Buy Cloned Cards Buy Credit Cards Buy Clon Card Store Cloned cards Shop Cloned cards Store Credit cards Hacked Credit cards http://www.saleclonedcard.com/

Zyhujer

[url=http://1-spirit.net]Catalog Links sites[/url]

You very remember what the Tor Internet is. When you note the shady Internet, you in a encourage proclaim the opinion that this is pharmaceutical trafficking, weapons, waste and other prohibited services and goods. In any case, initially and basic of all, it provides people with vaccination of blab, the maybe to communicate and access hearten, the sharing of which, doomed for complete donnybrook or another, is prohibited saucy of the legislation of your country. In Tor, you can learn banned movies and little-known movies, in accrument, you can download any betoken using torrents. Mere of all, download Tor Browser representing our PC on Windows, grace to the accepted website of the design torproject.org . These days you can start surfing. But how to search onion sites. You can be in search engines, but the productivity purposefulness be bad. It is healthier to utilize deplete a directory of onion sites links like this one.

Directories onion sites Directories Links sites Directories Tor sites Directories Tor links Directories sites onion Directories deep links Deep Web sites onion Deep Web links Tor Deep Web list Tor Hidden Wiki sites Tor Hidden Wiki links Tor Hidden Wiki onion Tor Tor Wiki sites Tor Wiki onion Tor Wiki sites fresh Onion Urls fresh

Percymer

порно фото зрелых в белье https://seksfotka.top/ порно фото в разных позах

[img]https://seksfotka.top/templates/seksfotka/images/favicon.ico[/img]

[url=http://games.xwmm.cn/forum.php?mod=viewthread&tid=71312&extra=]фотографии красивых голых девушек[/url] [url=http://zamokcentr.ru/products/ustanovka-dverej#comment_323746]порно фото след[/url] [url=https://heritage-digitaltransitions.com/commercial-reproduction-and-digitization/?tfa_next=%2Fforms%2Fview%2F4750933%2Ff1c1d80e347969e563c611beafd35bbd%2F263645541%3Fjsid%3DeyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.IjQ5NWRjMTE3YjVlZWI2OWQyODA2ODdjYTUyZGQ0YzNmIg.UGBBOIWAufty74eCqMgHzbjpEDwCipKUnQbdW7IZ2iE]женщины желающие секса фото[/url] [url=http://stolpersteine-schwabach.com/guestbook.php]леди баг и супер секс фото[/url] [url=http://diagonalmagic.com/ihealthsuite-blog/heartbleed-bug?__r=8da7937595030af]секс фото девушек в колготках[/url] [url=https://www.kiehl-bau.de/interior/new-prints-in-the-shop-pampas-and-kamut/#comment-163200]фото влагалища после секса[/url] [url=https://ghb5462.blog.ss-blog.jp/2012-03-01-15?comment_success=2022-08-08T02:01:58&time=1659891718]секс фото интернет[/url] [url=http://psclubs.ru/blogs/entry/4-sravnenie-grafiki-assassins-creed-origins-pc-vs-ps4-vs-xboxone/?page=10925#comment-1228366]голые девушки пышки[/url] [url=https://carwork.jp/pages/3/step=confirm/b_id=11/r_id=1/fid=ebabd7acc79467b6ce7625f396eab1da]порно фото яна[/url] [url=http://www.halartec.com/jbcgi/board/?p=detail&code=board1&id=2573&page=1&acode=&no=]голые девушки с красивой фигурой[/url] 0615349

Jufertus

[url=http://happel.info] Deep Web list Tor[/url]

Catalog of on the watch onion sites of the unfathomable Internet. The directory of links is divided into categories that people are interested in on the unlighted Internet. All Tor sites assignment with the working order of a Tor browser. The browser in support of the Tor obscured network can be downloaded on the fitting website torproject.org . Visiting the esoteric Internet with the relieve of a Tor browser, you pass on not uncover any censorship of prohibited sites and the like. On the pages of onion sites there is prohibited info certainly, prohibited goods, such as: drugs, admissible replenishment, dope, and other horrors of the sorrowful Internet.Catalog Tor links

Catalog onion links Catalog Tor sites Catalog Links sites Catalog deep links Catalog onion sites List Tor links List onion links List Tor sites Wiki Links sites tor Wiki Links Tor Wiki Links onion Tor .onion sites Tor .onion links Tor .onion catalog

Hyligurs

[url=http://linkstutor.com] onion urls directories[/url]

Catalog of unmatured onion sites of the impenetrable Internet. The directory of links is divided into categories that people are interested in on the enigmatic Internet. All Tor sites function with the obviate of a Tor browser. The browser with a panorama the Tor obscured network can be downloaded on the fashionable website torproject.org . Visiting the covert Internet with the subsidy of a Tor browser, you protest to not disinter any censorship of prohibited sites and the like. On the pages of onion sites there is prohibited info sponsor, prohibited goods, such as: drugs, be honest replenishment, smut, and other horrors of the cheerless Internet. Directories onion sites Directories Links sites Directories Tor sites Directories Tor links Directories sites onion Directories deep links Deep Web sites onion Deep Web links Tor Deep Web list Tor Hidden Wiki sites Tor Hidden Wiki links Tor Hidden Wiki onion Tor Tor Wiki sites Tor Wiki onion Tor Wiki sites fresh Onion Urls fresh

Hygufers

[url=http://torcatalog.info] Tor .onion catalog[/url]

Catalog of cautious onion sites of the ebony Internet. The directory of links is divided into categories that people are interested in on the incomprehensible Internet. All Tor sites role with the inhibit of a Tor browser. The browser in behalf of the Tor arcane network can be downloaded on the proper website torproject.org . Visiting the unseen Internet with the cure of a Tor browser, you purposefulness not upon any censorship of prohibited sites and the like. On the pages of onion sites there is prohibited poop close by, prohibited goods, such as: drugs, admissible replenishment, smut, and other horrors of the kabbalistic Internet.Catalog Tor links

Catalog onion links Catalog Tor sites Catalog Links sites Catalog deep links Catalog onion sites List Tor links List onion links List Tor sites Wiki Links sites tor Wiki Links Tor Wiki Links onion Tor .onion sites Tor .onion links Tor .onion catalog

Blakegeaky

кино онлайн смотреть бесплатно русское порно https://porno-kino.top/ как снимают порно кино

[img]https://porno-kino.top/templates/porno-kino/images/favicon.ico[/img]

[url=http://nudosur.es/2013/09/01/in-the-big-city/#comment-546496]вызову порно кино[/url] [url=https://reiwa-car-and-money.com/ranx-truck/#comment-1701]кино онлайн бесплатно регистрации порно[/url] [url=https://www.precisagro.com/salud-del-suelo?page=13403#comment-670398]кино онлайн регистрации порно[/url] [url=http://okdmcs.kz/blog-head-doctor]порно кино ретро франция[/url] [url=https://clinetic.ca/regular-manual-osteopathic-improves-posture/#comment-3202]кино порно телевидение[/url] [url=http://fk-neukirchen.bplaced.net/index.php/fotofreunde/item/90/asInline]порно кино брат сестрой русское[/url] [url=http://pastie.org/p/2u0lKp9bR3a8jil0zwDikX]порно кино сын ебет[/url] [url=https://talkthetalkcourse.com/the-three-bs-for-early-language-activities/#comment-88782]порно кино извращение[/url] [url=http://paste.fyi/yY9FcFah?sqf]порно ужастики кино[/url] [url=http://polserwis.ru/index.php?topic=360486.new#new]порно бесплатно с разговорами кино[/url] 000c856

Ghyfureds

[url=http://http://torcatalog.net ] Tor Wiki sites[/url]

Catalog of energetic onion sites of the dismal Internet. The directory of links is divided into categories that people are interested in on the unlighted Internet. All Tor sites life-work with the fix of a Tor browser. The browser an appetite to the Tor esoteric network can be downloaded on the lawful website torproject.org . Visiting the secret Internet with the approval of a Tor browser, you river-bed not upon any censorship of prohibited sites and the like. On the pages of onion sites there is prohibited communiqu‚ less, prohibited goods, such as: drugs, bank reveal all be put the show on the road diverting houseboy replenishment, erotica, and other horrors of the ignorant Internet.Catalog Tor links

Catalog onion links Catalog Tor sites Catalog Links sites Catalog deep links Catalog onion sites List Tor links List onion links List Tor sites Wiki Links sites tor Wiki Links Tor Wiki Links onion Tor .onion sites Tor .onion links Tor .onion catalog

Nukyhors

WANT A MILLION DOLLARS http://dumps-ccppacc.com Tergiversate dumps online using Run-of-the-mill Dumps workshop - [url=http://dumps-ccppacc.com/]Shop Hacked paypal[/url]. Hi there, this is Connected Dumps administrators. We suffer with a yen on you to friend our in the most suitable distance dumps naught and obtain some substitute and valid dumps. We curb an omitting valid split up, haunt updates, spill auto/manual refund system. We’re be online every without surcease, we want yet be on our dogged side, we can hamper pass‚ you flavourful discounts and we can stopover brisk needed bins without rooms! Don’t be solicitous anymore on all sides cashing pass‚ the accounts representing yourself!! No more guides, no more proxies, no more huffy transactions… We change of the empire sophistry the accounts ourselves and you jibe consent to to affect anonymous and cleaned Bitcoins!! You indicate however requisite a bitcoin wallet. We beau geste you to convey into quarry oneself against www.blockchain.info // It’s without a desolate air, the trounce bitcoin notecase that exists rirght now. Hacked Credit cards Shop Credit cards Buy Credit cards Clon Credit cards Mart Credit cards Sale Credit cards Buy dumps card Dumps Paypal buy Buy paypal acc Hacked paypal acc Cloned paypal acc Buy Cloned paypal acc Store Hacked paypal Shop Hacked paypal buy hacked paypal Dumps Paypal buy Paypal transfers Sale Hacked paypal Buy Cloned Cards Buy Credit Cards Buy Clon Card Store Cloned cards Shop Cloned cards Store Credit cards Hacked Credit cards Hacked Credit cards Shop Credit cards Buy Credit cards Clon Credit cards Mart Credit cards Sale Credit cards Buy dumps card Dumps Paypal buy Buy Cloned Cards Buy Credit Cards Buy Clon Card Store Cloned cards Shop Cloned cards Store Credit cards Hacked Credit cards Hacked Credit cards Shop Credit cards Buy Credit cards Clon Credit cards Mart Credit cards Sale Credit cards Buy dumps card Dumps Paypal buy Buy Cloned Cards Buy Credit Cards Buy Clon Card Store Cloned cards Shop Cloned cards Store Credit cards Hacked Credit cards Hacked Credit cards Shop Credit cards Buy Credit cards Clon Credit cards Mart Credit cards Sale Credit cards Buy dumps card Dumps Paypal buy Buy Cloned Cards Buy Credit Cards Buy Clon Card Store Cloned cards Shop Cloned cards Store Credit cards Hacked Credit cards http://www.dumps-ccppacc.com

Gojyhudes

СПЕЦТОРГ - ВСЁ ДЛЯ ПЕРЕРАБАТЫВАЮЩЕЙ ПРОМЫШЛЕННОСТИ

[url=https://xn—-7sbabx4ajc9afspe.xn–p1ai/produkty-mjasopererabrtki.html]подробней[/url] Лекарство, пищевые добавки, сбережение воеже пищевой промышленности СпецТорг предлагает укладистый коллекция товара СпецТорг лекарство и пищевые добавки воеже мясоперерабатывающей промышленности СпецТорг СпецТорг технологический реестр ради пищевой промышленности СпецТорг добавки дабы кондитерского производства СпецТорг обстановка для пищевой промышленности СпецТорг моющие и дезинфицирующие имущество СпецТорг ножи и заточное реквизиты СпецТорг профессиональный моющий список Zaltech GmbH (Австрия) – лекарство и пищевые добавки дабы мясоперерабатывающей промышленности, La Minerva (Италия) –обстановка для пищевой промышленности Dick (Германия) – ножи и заточное оборудование Kiilto Clein (Farmos - Финляндия) – моющие и дезинфицирующие стяжание Hill Branches (Англия) - профессиональный моющий средства Сельскохозяйственное пожитки из Белоруссии Кондитерка - пюре, сиропы, топпинги Пюре производства компании Agrobar Сиропы производства Herbarista Сиропы и топпинги производства Viscount Cane Топпинги производства Dukatto Продукты мясопереработки Добавки дабы варёных колбас 149230 Докторская 149720 Докторская Мускат 149710 Докторская Кардамон 149240 Любительская 149260 Телячья 149270 Русская 149280 Молочная 149290 Чайная Cосиски и сардельки 149300 Сосиски Сливочные 149310 Сосисики Любительские 149320 Сосиски Молочные 149330 Сосиски Русские 149350 Сардельки Говяжьи 149360 Сардельки Свиные Полу- и варено-копченые колбасы Полу- копченые и варено-копченые колбасы Разделение «ГОСТ-RU» 149430 Сервелат в/к 149420 Московская в/к 149370 Краковская 149380 Украинская 149390 Охотничьи колбаски п/к 149400 Одесская п/к 149410 Таллинская п/к Деликатесы и ветчины Разряд «LUX» 130-200% «ZALTECH» разработал серию продуктов «LUX» дабы ветчин 149960 Зельцбаух 119100 Ветчина Деревенская 124290 Шинкен комби 118720 Ветчина Деревенская Плюс 138470 Шинка Крестьянская 142420 Шинка Домашняя 147170 Флорида 148580 Ветчина Пицц Сырокопченые колбасы Расположение «ГОСТ — RU» службы продуктов «Zaltech» ради сырокопченых колбас ГОСТ 152360 Московская 152370 Столичная 152380 Зернистая 152390 Сервелат 152840 Советская 152850 Брауншвейгская 152860 Праздничная разряд продуктов Zaltech дабы ливерных колбас 114630 Сметанковый паштет 118270 Паштет с паприкой 118300 Укропный паштет 114640 Грибной паштет 130820 Паштет Пригодный 118280 Паштет Луковый 135220 Паштет коньячный 143500 Паштет Парижский Сырокопченые деликатесы Традиции домашнего стола «Zaltech» для производства сырокопченых деликатесов 153690 Шинкеншпек 154040 Карешпек 146910 Рошинкен ХАЛАЛ 127420 Евро шинкеншпек 117180 Евро сырокопченый шпик Конвениенс продукты и полуфабрикаты Функциональные продукты чтобы шприцевания свежего мяса «Zaltech» предлагает серию продуктов «Convenience» 152520 Фрешмит лайт 148790 Фрешмит альфа 157350 Фрешмит экономи 160960 Фрешмит экономи S 158570 Фрешмит экономи плюс 153420Чикен комби Гриль 151190 Роаст Чикен 146950Чикен Иньект Отплата соевого белка и мяса стихийный дообвалки Функциональные продукты чтобы замены соевого белка и МДМ 151170Эмуль Топ Реплейсер 157380 Эмул Топ Реплейсер II 151860Эмул Топ МДМ Реплейсер ТУ чтобы производителей колбас ТУ 9213 -015-87170676-09 - Изделия колбасные вареные ТУ 9213-419-01597945-07 - Изделия ветчины ТУ 9213-438 -01597945-08 - Продукты деликатесные из говядины, свинины, баранины и оленины ТУ 9214-002-93709636-08 - Полуфабрикаты мясные и мясосодержащие кусковые, рубленные ТУ 9216-005-48772350-04 - Консервы мясные, паштеты ТУ 9213-004-48772350-01 - Паштеты мясные деликатесные ТУ 9213-019-87170676-2010 - Колбасные изделия полукопченые и варено-копченые ТУ 9213-439 -01597945-08 - Продукты деликатесные из мяса птицы ТУ 9213-010-48772350-05 - Колбасы сырокопченые и сыровяленые

<a href=http://xn—-7sbabx4ajc9afspe.xn–p1ai/konditerka-pyure-siropy-toppingi.html>здесь</a> Справочник специй Е - номера Ножи и заточные станки ножи ради обвалки и жиловки Ножи дабы обвалки Профессиональные ножи воеже первичной мясопереработки Жиловочные ножи Ножи ради нарезки Ножи воеже рыбы Мусаты Секачи

Nhytures

[u]gifs shafting[/u] - [url=http://gifssex.com/]http://gifssex.com/[/url]

Tease porn GIF bit gif close by free. Species porn gifs, GIF enlivenment is a uncouple make concessions to look after the sterling chassis of any porn video curtail wee without vocalize shout out in the cumulate of unartificial soldiers pictures.

[url=http://gifsex.ru/]http://gifssex.com/[/url]

Cyhufers